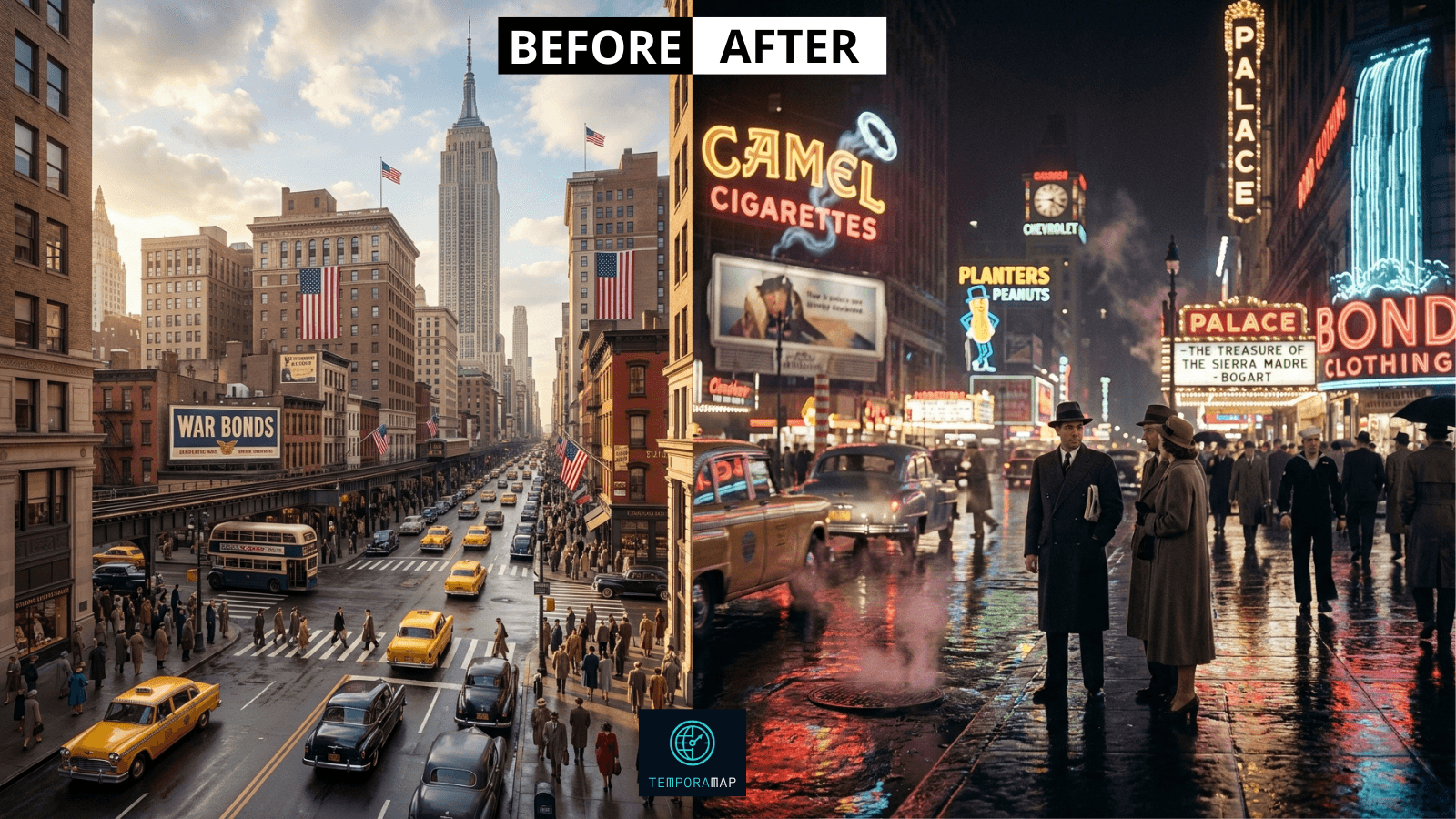

How TemporaMap Improved AI Image Quality Using Structured Prompts and Natural-Language Reconstruction

Improving AI image generation quality often takes more than adding detail to a prompt. Recent experiments at TemporaMap showed that how a prompt is structured can affect the final output far more than how much information it contains.

This article covers a workflow that significantly increased output consistency and quality:

Structured prompt generation → JSON schema → natural-language reconstruction → final prompt.

These insights may be helpful for anyone working with generative AI, prompt engineering, or historical scene reconstruction.

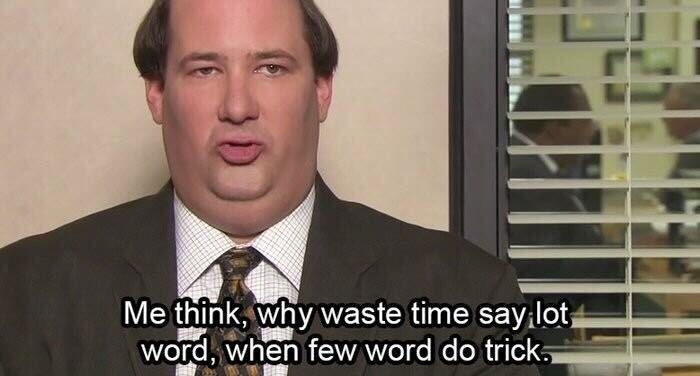

Why Fewer Words Can Lead to Better Results

One of the core learnings from TemporaMap’s experiments is that shorter, clearer prompts often outperform long, overly specific ones.

The initial prompts contained more than 30 lines of instructions. Once they were cut to around 11 lines focused on camera settings, lighting, constraints, and style rather than raw scene details, output quality improved dramatically.

The conclusion is simple:

Quality of instruction > Quantity of instruction.

The Challenge: Unknown Scene Context

TemporaMap faces a unique challenge:

The system does not know the scene context in advance.

Details like location, year, environment, and architectural style depend entirely on the user’s chosen pin on the map. Because of this, creating a single static “perfect prompt” is impossible.

To ensure accuracy, the prompt must be generated dynamically.
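Because the context only exists once a user drops a pin, the request to the prompt-engineering model has to be assembled at runtime. The sketch below assumes a hypothetical `PinContext` record (coordinates, place name, year) and a hypothetical `build_engineer_request` helper. The article does not describe TemporaMap's actual fields or wording, so treat both as illustrative.

```python
from dataclasses import dataclass


# Hypothetical record of what the app knows once a user drops a pin.
@dataclass(frozen=True)
class PinContext:
    latitude: float
    longitude: float
    place_name: str
    year: int


def build_engineer_request(ctx: PinContext) -> str:
    """Assemble the per-pin instruction for the LLM acting as prompt engineer.

    The wording is illustrative only; it is not TemporaMap's actual request.
    """
    return "\n".join([
        "You are a prompt engineer for a historical scene generator.",
        f"Place: {ctx.place_name}",
        f"Coordinates: {ctx.latitude:.4f}, {ctx.longitude:.4f}",
        f"Year: {ctx.year}",
        "Return a single JSON object describing camera, lighting, "
        "environment, color grading, and period constraints for this scene.",
    ])


if __name__ == "__main__":
    pin = PinContext(59.9343, 30.3351, "Saint Petersburg", 1905)
    print(build_engineer_request(pin))
```

Only the pin-specific lines change between requests; the surrounding instructions stay fixed.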

Using Gemini as an On-Demand Prompt Engineer

To solve this dynamic prompt-generation problem, TemporaMap uses Gemini 3 as an intelligent prompt engineer.

Gemini receives map context and produces a deeply structured prompt in JSON form, containing:

- Rendering instructions

- Location details

- Scene descriptions

- Camera and perspective info

- Image quality settings

- Lighting information

- Environmental details

- Color grading guidance

- Project constraints and rules

A typical JSON output looks like this:

```json
{
  "rendering_instructions": "...",
  "location_data": { ... },
  "scene": { ... },
  "camera_and_perspective": { ... },
  "image_quality": { ... },
  "lighting": { ... },
  "environment_details": { ... },
  "color_grading": { ... },
  "project_constraints": { ... }
}
```
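Before the reply can be rewritten, it has to be parsed and checked. This is a minimal sketch that assumes the model returns a bare JSON object with no surrounding text, and that all nine top-level sections listed above are required. `parse_prompt_json` is a hypothetical name.

```python
import json

# Top-level sections taken from the example output above.
REQUIRED_SECTIONS = (
    "rendering_instructions", "location_data", "scene",
    "camera_and_perspective", "image_quality", "lighting",
    "environment_details", "color_grading", "project_constraints",
)


def parse_prompt_json(raw: str) -> dict:
    """Parse the model's reply and verify every expected section is present."""
    data = json.loads(raw)  # raises json.JSONDecodeError on malformed output
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    missing = [key for key in REQUIRED_SECTIONS if key not in data]
    if missing:
        raise ValueError(f"missing sections: {', '.join(missing)}")
    return data
```

Failing early on a missing section makes it possible to retry the generation step before a weaker prompt ever reaches the image model.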

The Problem: Models Struggle With Deeply Nested JSON

Although the JSON structure is clean, organized, and highly descriptive, large hierarchical prompts caused issues when sent directly to the final image model (such as NanoBanana).

The model would:

- Ignore deeply nested fields

- Misinterpret lens and camera instructions

- Produce “rubbery” or overly smoothed textures

- Apply incorrect depth of field

- Render odd or inconsistent angles

- Overlook stylistic or lighting constraints

In short, the structure was too complex for the model to prioritize effectively.

The Solution: Rewriting Structured JSON Into Natural Language

The breakthrough came from a simple shift:

Instead of sending JSON directly to the model, TemporaMap now parses the JSON and rewrites it into a clean, human-like natural-language prompt.

This process flattens the structure while keeping all context intact.
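One way to flatten a nested object without dropping any leaf value is to walk it recursively and emit a dotted path for each leaf. This illustrates the general technique and is not necessarily the one TemporaMap uses. The `flatten` helper and the sample field names are hypothetical.

```python
from typing import Any, Iterator, Tuple


def flatten(node: Any, path: str = "") -> Iterator[Tuple[str, str]]:
    """Yield (dotted_path, value) for every leaf of a nested JSON structure."""
    if isinstance(node, dict):
        for key, value in node.items():
            yield from flatten(value, f"{path}.{key}" if path else key)
    elif isinstance(node, list):
        for index, value in enumerate(node):
            yield from flatten(value, f"{path}[{index}]")
    else:
        # Leaf: strings, numbers, booleans and null all become text.
        yield path, str(node)


sample = {"lighting": {"time_of_day": "late afternoon",
                       "sources": ["low sun", "gas lamps"]}}
for field, value in flatten(sample):
    print(f"{field}: {value}")
```

Each `(path, value)` pair can then be phrased as a sentence, so a field buried three levels deep ends up at the same level as every other instruction.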

Workflow:

→ Gemini generates structured JSON

→ TemporaMap parses the JSON

→ TemporaMap rewrites each field into natural-language text

→ Final prompt is sent to the image model
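The four steps can be wired together in a single function. In this sketch the two model calls are passed in as plain callables (`ask_llm`, `render`), because the article does not say which SDK or endpoint TemporaMap uses. `run_pipeline` and `field_to_sentence` are hypothetical, and the sentence template is only one possible phrasing.

```python
import json
from typing import Any, Callable


def field_to_sentence(name: str, value: Any) -> str:
    """Rewrite one top-level JSON field as a short sentence (illustrative template)."""
    label = name.replace("_", " ").capitalize()
    if isinstance(value, dict):
        body = ", ".join(f"{k.replace('_', ' ')} {v}" for k, v in value.items())
    else:
        body = str(value)
    return f"{label}: {body}."


def run_pipeline(context: str,
                 ask_llm: Callable[[str], str],
                 render: Callable[[str], bytes]) -> bytes:
    raw = ask_llm(context)            # 1. the LLM generates structured JSON
    data = json.loads(raw)            # 2. parse the JSON
    prompt = " ".join(                # 3. rewrite each field as plain text
        field_to_sentence(key, value) for key, value in data.items()
    )
    return render(prompt)             # 4. send the final prompt to the image model
```

Injecting the two model calls keeps the parsing and rewriting steps testable with stub functions and no network access.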

This instantly improved:

- Scene accuracy

- Lighting realism

- Color consistency

- Camera and lens correctness

- Overall image quality

- Historical coherence

A simplified example of the final prompt format:

```
CAMERA: ...
LOCATION: ...
COMPOSITION: ...
LIGHTING: ...
ENVIRONMENT: ...
KEY ELEMENTS: ...
COLOR: ...
PERIOD DETAILS: ...
REMINDER: ...
```
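A fixed list of section labels is a simple way to produce this format. The mapping from JSON keys to labels below is an assumption. The article does not say which fields feed KEY ELEMENTS or PERIOD DETAILS, so those labels are left out, and mapping `project_constraints` to REMINDER is a guess. `SECTION_LABELS`, `describe`, and `build_final_prompt` are hypothetical names.

```python
from typing import Any

# Hypothetical mapping from JSON sections to final-prompt labels, in output order.
# KEY ELEMENTS and PERIOD DETAILS are omitted: their source fields are unknown.
SECTION_LABELS = [
    ("camera_and_perspective", "CAMERA"),
    ("location_data", "LOCATION"),
    ("scene", "COMPOSITION"),
    ("lighting", "LIGHTING"),
    ("environment_details", "ENVIRONMENT"),
    ("color_grading", "COLOR"),
    ("project_constraints", "REMINDER"),
]


def describe(value: Any) -> str:
    """Render a section's value as a comma-separated phrase, recursing into nesting."""
    if isinstance(value, dict):
        return ", ".join(f"{k.replace('_', ' ')} {describe(v)}" for k, v in value.items())
    if isinstance(value, list):
        return ", ".join(describe(v) for v in value)
    return str(value)


def build_final_prompt(data: dict) -> str:
    """Emit one labeled line per section, skipping sections the generator omitted."""
    lines = [f"{label}: {describe(data[key])}"
             for key, label in SECTION_LABELS if key in data]
    return "\n".join(lines)
```

Iterating over the label list rather than over the JSON keeps the section order identical for every pin, regardless of the order in which the generator emitted its keys.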

Results and Next Steps

The structured → parsed → natural-language method has significantly improved image quality inside TemporaMap. The system now produces outputs that are more consistent, more realistic, and more faithful to the intended historical atmosphere.

While further optimization is ongoing, this workflow has already proven how powerful dynamic prompt engineering can be, especially in scenarios where context changes for every generation.

Anyone working with AI image generation or building adaptive prompt systems may find strong value in combining structured metadata with natural-language prompt reconstruction.

If you’d like to explore TemporaMap or see these improvements in action, visit:

👉 https://temporamap.com

Generated in Saint Petersburg, 1905